Dr. Greg Law, Founder/CTO, Undo

Maurice Wilkes was the first person to write code to solve a real problem on a machine resembling what we think of as a modern computer - an electronic computer that executed stored programs from memory. He was the world’s first programmer.

He was trying to solve a biology problem - a forerunner of genome sequencing - and thought that one of these newfangled computers might do it. In his memoir, he recalls the moment when he realised that a good part of the remainder of his life was going to be spent finding errors in his own programs.

Humans are just not very good at writing software, and the process of getting it to work - debugging (we sometimes call it ‘inner loop debugging’) - completely dominates software development.

Let me give you a real example. Cadence, a Silicon Valley Electronic Design Automation (EDA) firm, had its simulation software running at one of its large chip-design customers. At any one time, thousands of regression tests were running in the background, and Cadence’s software would crash in about 1 run out of 300, after about 8 hours of execution. And every time it was different. Cadence had engineers on the customer’s site for three months trying to get to the bottom of the problem.

But debugging doesn’t have to be so extreme. It could just be that I have written some code which hasn’t worked the first time around and I end up spending an hour in the afternoon to get it going. This is inner loop debugging; the Cadence example is outer loop debugging.

Whether inner or outer loop, bugs vary enormously in how long they take to solve, and these two examples sit at opposite ends of the spectrum. Added up across all the bugs we encounter, that is a lot of time spent.

Debuggability is the limiting factor in software development. We just kind of accept this: debugging dominates a programmer’s life so much that it is considered part of the job.

Debugging is the process we go through to answer one simple question: what happened? Usually that translates (many times over) to: what was the previous state of my program?

We have all used debuggers and they are kind of useful, but they don’t really help us answer that key question of “what happened?” They allow us to stop a program, peer around, run it forward maybe a line, run it forward maybe to a breakpoint. It’s a bit like watching a film with a finger on the pause button. We get to look at a still picture, then allow the debugger to run possibly billions of instructions until the next still frame. We kind of get a feel for what’s going on, trying to extract clues as we step forward through the program’s execution. But there’s no step back, no skip to a given chapter.

What if we could control time as well? What if we could not only step the program forward, but step the program back a line, back 100 lines, or go back a minute?

What if we could just see exactly what happened, rather than trying to figure it out?

This is a much more direct way to get the answer. There are research papers going back to the 1970s, and countless PhDs have been devoted to this kind of thing. You don’t have to think about it too hard to understand that this is a really powerful solution if you can make it work.

Thankfully, this concept is no longer an academic one: Software Failure Replay is already in use across a number of industries where complex software is being developed, including telecoms/networking, EDA and data management.

Software Failure Replay completely captures a failing process. It’s a bit like a CCTV recording. Once you have the recording, you can go back to literally any instruction that executed and see any register state - on demand and with minimal overhead.
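To make the idea concrete, here is a deliberately tiny sketch in Python. It is not how any real replay engine is implemented (production record-and-replay tools typically capture a process's non-deterministic inputs and re-execute, rather than logging every write). The `Recording` class and its method names are hypothetical, invented purely for illustration. It shows only the principle: if every change is captured, any earlier state can be rebuilt on demand.

```python
from dataclasses import dataclass, field


@dataclass
class Recording:
    """Toy event log: one (step, address, old, new) entry per memory write."""
    writes: list = field(default_factory=list)

    def record_write(self, memory, address, value):
        # Log the write (including the value it replaced), then apply it.
        step = len(self.writes)
        self.writes.append((step, address, memory.get(address), value))
        memory[address] = value

    def state_at(self, step):
        """Rebuild memory as it was just after write number `step`."""
        memory = {}
        for index, address, _old, new in self.writes:
            if index > step:
                break
            memory[address] = new
        return memory


recording = Recording()
live_memory = {}
recording.record_write(live_memory, "x", 1)
recording.record_write(live_memory, "y", 2)
recording.record_write(live_memory, "x", 99)

# "Go back in time" to just after the second write (step index 1).
past = recording.state_at(1)
```

Here `past` holds the values of `x` and `y` as they were before the third write, even though the live program has long since moved on.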

Remember the case of the EDA software issue I wrote about a few paragraphs earlier? After spending three months trying to root-cause a memory corruption issue, the engineers tried Software Failure Replay. They left a bunch of tests running over the weekend with the Record engine enabled. On the following Monday, sure enough, a couple of tests had failed… but now they had the recording file.

They took one of the recordings of the test failures, loaded it up in the Replay engine, set a watchpoint on the corrupted memory location, and ran back for three hours (as that was how far in the past the actual corruption happened). Once it had run back to the root of the problem, they not only diagnosed the bug but also fixed the code within a matter of hours.
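The "set a watchpoint and run back" step can be illustrated with a toy model in Python. This is a minimal sketch under stated assumptions, not the actual Replay engine: `run_program` is a made-up buggy program, and `reverse_watch` is a hypothetical helper that searches a write log backwards. The point is only that, with a recording in hand, finding the last write to a corrupted location is a direct lookup rather than guesswork.

```python
def run_program(memory, log):
    """Hypothetical buggy program: step 3 overwrites a value it should not touch."""
    def write(step, address, value):
        # Record every write so it can be inspected after the failure.
        log.append({"step": step, "address": address,
                    "old": memory.get(address), "new": value})
        memory[address] = value

    write(0, "header", "OK")
    write(1, "count", 1)
    write(2, "count", 2)
    write(3, "header", "??")   # the corruption
    write(4, "count", 3)
    write(5, "count", 4)


def reverse_watch(log, address, from_step=None):
    """Walk the log backwards and return the most recent write to `address`.

    `from_step` is the step to start searching back from (default: the end).
    """
    start = len(log) - 1 if from_step is None else from_step
    for entry in reversed(log[: start + 1]):
        if entry["address"] == address:
            return entry
    return None


memory, log = {}, []
run_program(memory, log)

# The failure is noticed at the end; run back to the write that caused it.
culprit = reverse_watch(log, "header")
```

In this toy run, `culprit` is the step-3 write, along with the value it clobbered. In the real case described above the same question took three hours of reverse execution to answer, but it was still a single question with a definite answer.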

They had gone from three months of making no progress to having the defect fixed in hours. There are lots of cases like these. However, the technology has also proven to routinely turn an afternoon debugging session into a 10-minute one. Although less glamorous, this actually turns out to have a huge impact on overall productivity.

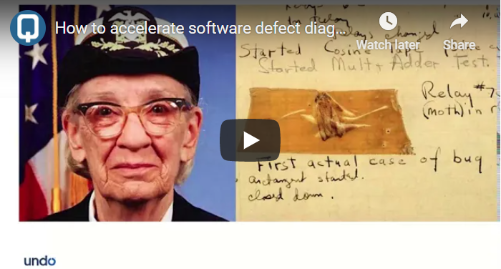

Want to see what Software Failure Replay looks like in practice? I illustrate how to resolve a data structure corruption issue in this on-demand CISQ webinar. (The demo starts at 13:28.)